We do not have personal opinions or preferences. However, we can provide some general information about the differences between GPT-4 and GPT-3.

GPT-4 has not yet been publicly released or even officially announced by OpenAI. Therefore, we cannot provide any detailed information about its features or capabilities. However, based on the previous releases of GPT models, it is likely that GPT-4 will have a larger number of parameters than GPT-3 and may therefore be able to generate even more complex and sophisticated text.

GPT-3, on the other hand, was released by OpenAI in 2020 and is currently the latest publicly available GPT model. It has 175 billion parameters, which is significantly more than its predecessor, GPT-2. GPT-3 has demonstrated impressive language capabilities, such as generating coherent and fluent text, answering questions, and even performing simple reasoning tasks. However, like any machine learning model, it still has limitations and biases that need to be addressed.
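To put the parameter count in perspective, here is a rough back-of-the-envelope sketch. The 175 billion figure comes from the text above. The GPT-2 figure (1.5 billion, its largest variant) and the bytes-per-parameter values are assumptions added for illustration, and the result covers only the storage of the weights themselves, not what it takes to actually run the model.

```python
# Rough arithmetic on model size. Only GPT3_PARAMS comes from the article;
# the other constants are illustrative assumptions.
GPT3_PARAMS = 175_000_000_000   # stated in the text
GPT2_PARAMS = 1_500_000_000     # largest GPT-2 variant (assumption, not in the text)

# Common numeric formats and their size in bytes per parameter.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_storage_gb(num_params, dtype):
    """Return the storage needed for the raw weights, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


ratio = GPT3_PARAMS / GPT2_PARAMS
print(f"GPT-3 has roughly {ratio:.0f}x the parameters of GPT-2")
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: about {weight_storage_gb(GPT3_PARAMS, dtype):.0f} GB of weights")
```

Even at two bytes per parameter, the weights alone come to hundreds of gigabytes, which is one reason models of this size are typically accessed as a hosted service rather than run on a personal computer.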

Overall, while we cannot compare GPT-4 to GPT-3 yet, it is reasonable to assume that GPT-4 will build on the strengths of its predecessor and further advance the field of natural language processing.

Here are a few more details about GPT-3:

GPT-3 is currently the largest and most powerful language model publicly available, with 175 billion parameters. It was trained on a diverse range of texts, including books, articles, and web pages, and can generate human-like text on a wide variety of topics. It has been used for a range of applications, including language translation, chatbots, and text completion.

One of the most impressive features of GPT-3 is its ability to perform few-shot learning. This means that it can quickly adapt to new tasks or contexts with only a few examples, without the need for additional training. For example, it can learn to translate between two languages with only a few example pairs of translated text.
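As a minimal sketch of what "a few example pairs" looks like in practice, the snippet below assembles a few-shot translation prompt as plain text. The function name, the labels, and the example words are all hypothetical, and the snippet only builds the prompt string; it does not call any model or API.

```python
def build_few_shot_prompt(examples, query, source_label="English", target_label="French"):
    """Assemble a few-shot translation prompt from (source, target) example pairs."""
    lines = []
    for source_text, target_text in examples:
        lines.append(f"{source_label}: {source_text}")
        lines.append(f"{target_label}: {target_text}")
        lines.append("")  # blank line separates one example from the next
    # The last entry leaves the target side empty for the model to complete.
    lines.append(f"{source_label}: {query}")
    lines.append(f"{target_label}:")
    return "\n".join(lines)


# Hypothetical example pairs; a real prompt would use whatever pairs fit the task.
examples = [
    ("cheese", "fromage"),
    ("sea otter", "loutre de mer"),
]
prompt = build_few_shot_prompt(examples, "peppermint")
print(prompt)
```

The model is never retrained here. The examples sit inside the prompt itself, and the model is expected to continue the pattern for the final, unanswered line.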

However, despite its many strengths, GPT-3 has some limitations and biases that need to be addressed. For example, it can generate biased or offensive text if it is trained on biased or offensive data. Additionally, it sometimes struggles with common sense reasoning and can generate nonsensical or contradictory responses.

Overall, GPT-3 represents a major milestone in the field of natural language processing and has the potential to transform many aspects of our lives. However, it is important to continue developing and refining these language models to ensure that they are fair, ethical, and trustworthy.

As far as we know (as of March 2023), OpenAI has not yet officially announced or released GPT-4. Therefore, there is limited information available about its features or capabilities.

However, based on OpenAI’s previous releases of GPT models, it is likely that GPT-4 will have even more parameters than GPT-3, which has 175 billion parameters. This means that it will likely be able to generate even more complex and sophisticated text, and potentially have even more advanced capabilities, such as better natural language understanding, more accurate language translation, or improved text completion.

It is also possible that GPT-4 will address some of the limitations and biases present in earlier models, such as the issue of generating biased or offensive text. However, this is just speculation at this point, and we will have to wait for official announcements and releases from OpenAI to know for sure.

Overall, GPT-4 represents an exciting potential advancement in the field of natural language processing, and many researchers and developers are eagerly anticipating its release.

According to a recent article, Microsoft will release GPT-4 next week, and the new version of the AI chatbot will be able to produce videos from simple text prompts.

According to the article, GPT-4, the next iteration of OpenAI’s large language model (LLM), should be substantially more powerful than GPT-3.5, which runs ChatGPT at the moment.

OpenAI Introduces Chat GPT-4, a Photo-Compatible AI

According to a research blog post, OpenAI today released GPT-4, the next-generation AI language model that can interpret photographs and describe what’s in them.

The world has been captivated by ChatGPT, but until now the deep learning language model behind it supported only text inputs. GPT-4 also accepts image prompts.

According to OpenAI’s announcement, GPT-4 produces text outputs from inputs that contain interspersed text and images. OpenAI says that across a range of domains, including documents with text and photographs, diagrams, or screenshots, GPT-4 exhibits similar capabilities as it does on text-only inputs.
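One way to picture a prompt that mixes text and images is as an ordered list of parts, each of which is either a piece of text or an image. The sketch below is purely illustrative: the class names, the file name, and the helper function are hypothetical and are not OpenAI’s API or data format.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class TextPart:
    text: str


@dataclass
class ImagePart:
    path: str  # hypothetical local file path, used only for illustration


PromptPart = Union[TextPart, ImagePart]


def describe_prompt(parts: List[PromptPart]) -> str:
    """Summarize the order of modalities in an interleaved prompt."""
    labels = []
    for part in parts:
        if isinstance(part, TextPart):
            labels.append("text")
        else:
            labels.append("image")
    return " -> ".join(labels)


# A prompt in which a question, an image, and a follow-up instruction are interleaved.
prompt = [
    TextPart("What is unusual about this image?"),
    ImagePart("taxi-ironing.png"),
    TextPart("Answer in one sentence."),
]
print(describe_prompt(prompt))
```

Whatever the input looks like, the output side stays the same: the model answers in text.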

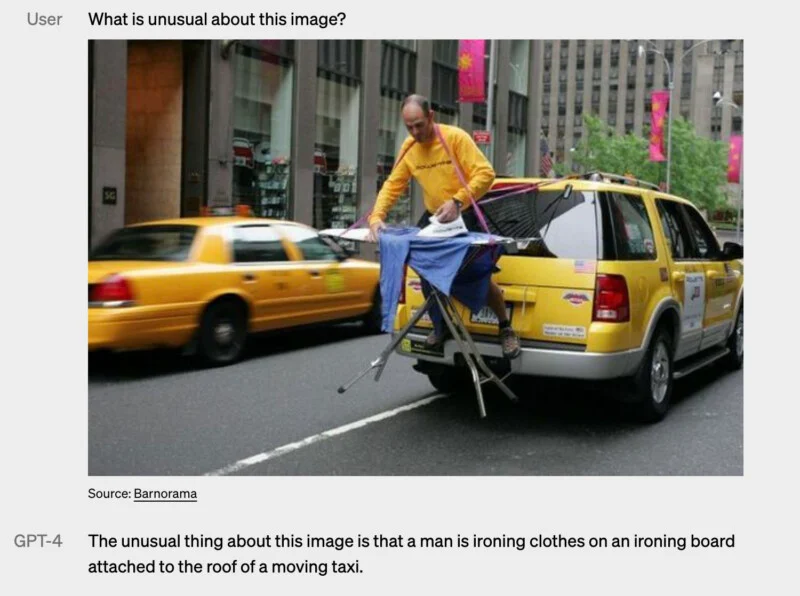

What this means in practice is that the AI chatbot will be able to analyze what is in an image. For example, it can tell the user what is unusual about the below photo of a man ironing his clothes while attached to a taxi.

Andreas Braun, chief technology officer for Microsoft Germany, said last week that GPT-4 will “provide completely other possibilities, like films.”

Nevertheless, today’s release announcement for GPT-4 does not mention video. The only multimodal component is image input, which is far less than anticipated.

Microsoft has already introduced Kosmos-1, a multimodal language model that works with several formats.

The AI in the Kosmos-1 demonstration can read images in addition to text. For instance, after receiving a picture of a clock showing 10:10, the AI is asked, “What time is it now?” It responds, “10:10 on a gigantic clock.”